Intro

__________________________________________________________

The end users of a phenomenon shader are usually lighters in a pipeline environment, so as a shader artist/developer it helps to think in terms of how a lighter will use the shader. The phenomenon does not replace the shader graph; it simplifies the user interface of the shader graph into something that is easier for the lighter to work with. This is also the direction RenderMan Studio 3 is currently going. Our previous RMS pipelines exposed the full shader tree to the lighter, which is unwieldy (1) for the lighter and (2) for the hardware. Unlike in RenderMan, however, there are no performance gains in mental ray.

I will be making two mental ray phenomenon shaders: the first will be the water droplet shader, and the second will be the rim shader, which will be an exercise. I can use these phenomena later on in my fruit shader series. Other resources for making phenomenon shaders can be found here,

Key Points

__________________________________________________________

- Splines, color ramps, Maya layered shader/texture nodes and any other nodes with array attributes will NOT work in a phenomenon

- Use mental ray equivalent nodes when possible

- Phenomenizer does not work with Maya nodes

Determining User Settings

__________________________________________________________

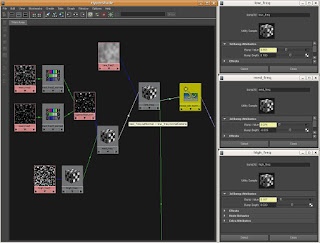

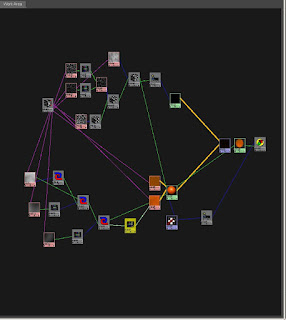

First, a review of my shader graph.


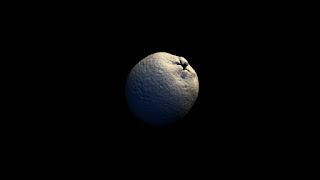

| Overview |


| Detailed Droplet Tree |

Here are the settings I consider important enough to expose for customization.

small droplet

- cell size

- density

- spottiness

large droplet

- diffuse weight

- reflection color

Reformatting the Shader Tree

__________________________________________________________

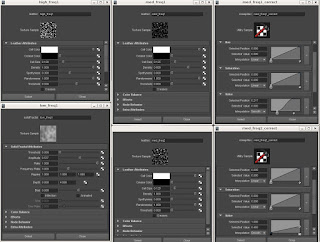

Looking at my shader graph, notice that it contains several remapHSV nodes. These are going to be difficult to implement in a phenomenon, because getting array inputs (the spline graph) to work has not been proven possible (yet?). For now I will work around the problem by substituting a contrast node for the remapHSV.


| Replace Remap with Contrast |

Here, I just tweak the contrast node to mimic what the remapHSV node is doing. The other remapHSV nodes define the cross-sectional shape of the droplet by customizing the gradient falloff of the noise map.


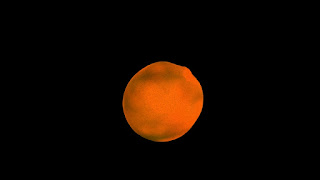

| Reworking and Testing the droplet |

Removing these remapHSV nodes makes the bump look slightly off, but the difference is only noticeable in extreme close-ups. I retweaked the threshold level of the leather noise node to match the values of my original, so I will add the threshold controls to my droplet UI. A quick way to test is to use surface shaders or, for mental ray nodes, a mib_illum_lambert. I also removed the layered texture node and replaced it with a mib_color_mix. Shown above is a quick comparison of each node and the final texture output.


| Cleaned up |

Writing the Phenomenon

__________________________________________________________

I will now implement the network above as a phenomenon. The first thing to do is write the declaration itself,

declare phenomenon

color "droplet_phen" (

)

version 1

apply material

end declare

This is the basic phenomenon declaration. Next, declare the root node, which should be the end result node.

shader "dropletSurface" "maya_surfaceshader" (

"outColor" = "mia_material1.outValue",

"outTransparency" 0. 0. 0.,

"outMatteOpacity" 1. 1. 1.,

"outGlowColor" 0. 0. 0.

)

shader "shadingEngine" "maya_shadingengine" (

"surfaceShader" = "dropletSurface.outColor",

"cutAwayOpacity" 0.,

"alphaMode" 0

)

root = "shadingEngine"

The root node is named shadingEngine, and it is an instance of the Maya node type "maya_shadingengine". Why am I using maya_shadingengine? It's explained here, which states,

"All maya built in mentalray shaders does not use a simple “color” as an output but a “struct”, a user defined output structure. Because root expects a color, it does not know how to evaluate this thing and this causes this error:...

...One shader that provides an color output instead of a struct is the shading group shader."

That is the basic structure of a phenomenon shader. Each node is defined as

shader "shader_instance_name" "mayabase_name"

followed by its properties. To find out the exact name and properties of a node, look inside the mentalray/include directory of the Maya installation for the following files,

- mayabase.mi - maya nodes

- base.mi - mentalray nodes

- architecture.mi - mia

- subsurface.mi - misss

Unlike the declarations in the aforementioned mi files, a node inside a phenomenon does not need to declare the type of each property; we are only assigning values to the properties.
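Looking up names by hand in those mi files gets tedious, so here is a minimal Python sketch that lists the input and output names of every `declare shader` block in a piece of mi text. It is not part of my original workflow: the function name `list_declarations` is made up, the two sample declarations are invented for illustration (they are not copied from mayabase.mi), and the regular expressions assume the simple layout shown rather than the full mi grammar.

```python
import re

# One "declare shader ... end declare" block (non-greedy, spans lines).
DECLARE_RE = re.compile(r"declare\s+shader\b(.*?)\bend\s+declare", re.DOTALL)
# The shader name is the quoted string directly before the opening "(".
NAME_RE = re.compile(r'"([^"]+)"\s*\(')
# A typed parameter such as: scalar "cellSize"  or  color texture "tex"
PARAM_RE = re.compile(r'\b([a-z]+(?:\s+texture)?)\s+"([^"]+)"')


def list_declarations(mi_text):
    """Map each declared shader name to its output and input names."""
    text = re.sub(r"#[^\n]*", "", mi_text)  # drop '#' comments
    found = {}
    for block in DECLARE_RE.findall(text):
        name_match = NAME_RE.search(block)
        if name_match is None:
            continue
        head = block[:name_match.start()]   # return type or struct { ... }
        body = block[name_match.end():]     # parameter list
        found[name_match.group(1)] = {
            # Empty when the shader returns one plain value instead of a struct.
            "outputs": [name for _, name in PARAM_RE.findall(head)],
            "inputs": [name for _, name in PARAM_RE.findall(body)],
        }
    return found


# Hypothetical declarations, only here to exercise the function.
SAMPLE = '''
declare shader
    struct {
        color "outColor",
        scalar "outAlpha"
    } "demo_noise" (
        scalar "cellSize",      # size of one cell
        transform "placementMatrix"
    )
    version 1
    apply texture
end declare

declare shader
    color "demo_mix" (
        color "color_0",
        scalar "weight_0"
    )
    version 1
end declare
'''

for shader_name, params in list_declarations(SAMPLE).items():
    print(shader_name, params)
```

To use it on a real file, read the file's text (for example with `open(path).read()`) and pass it to `list_declarations`; the path depends on the Maya installation.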

Before I start importing all the nodes into my phenomenon file, I declare the settings that the user will be able to access on the shader.

color "droplet_phen" (

scalar "diffuse_weight", #:default 0.2

color "reflection_color", #:default 0.9 0.9 0.9

scalar "sm_droplet_cell_size", #:default 0.125 shortname "smdr_size"

scalar "sm_droplet_density", #:default 0.3 shortname "smdr_density"

scalar "sm_droplet_spottyness", #:default 0 shortname "smdr_spot"

scalar "lg_droplet_cell_size", #:default 0.6 shortname "lgdr_size"

scalar "lg_droplet_density", #:default 0.1 shortname "lgdr_density"

scalar "mask_frequency", #:default 13

scalar "mask_time", #:default 0

vector "mask_bias", #:default 0.6 0.6 0.6

scalar "bump_depth", #:default 0.5

transform "sm_droplet_placement",

transform "lg_droplet_placement",

transform "mask_placement"

)

The three transform variables do not have default values, as these will differ from scene to scene. I left them open so I can manually connect a place3dTexture node into each transform slot. I will then link the interface to the three 3D textures I will be using later on. One can specify a default value for a transform variable; it's a 4x4 matrix, separated by commas (IIRC).
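To double-check the interface against the list of settings I planned to expose, the declaration can be summarised with a few lines of Python. This is only a sketch: `interface_params` is a made-up name, and the parsing assumes one parameter per line with the `#:default` and `shortname` annotations written exactly as in the block above.

```python
import re

# One interface line: type, quoted name, optional comma, optional "#:" note.
PARAM_RE = re.compile(
    r'^\s*(scalar|color|vector|transform|integer|boolean)\s+"([^"]+)"'
    r'\s*,?\s*(?:#:\s*(.*))?$'
)


def interface_params(interface_text):
    """Return (type, name, default, shortname) for each interface line."""
    rows = []
    for line in interface_text.splitlines():
        match = PARAM_RE.match(line)
        if not match:
            continue  # skips the phenomenon name line, ")" and blank lines
        ptype, name, note = match.group(1), match.group(2), match.group(3) or ""
        default = re.search(r'default\s+([^a-z"]+)', note)
        short = re.search(r'shortname\s+"([^"]+)"', note)
        rows.append((
            ptype,
            name,
            default.group(1).strip() if default else None,
            short.group(1) if short else None,
        ))
    return rows


# A few lines taken from the interface declared above.
INTERFACE = '''
color "droplet_phen" (
scalar "diffuse_weight", #:default 0.2
color "reflection_color", #:default 0.9 0.9 0.9
scalar "sm_droplet_cell_size", #:default 0.125 shortname "smdr_size"
transform "mask_placement"
)
'''

for row in interface_params(INTERFACE):
    print(row)
```

Parameters whose default comes back as `None`, such as the transforms, are the ones that have to be connected by hand in the scene.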

From the tip to the root, import the nodes into the phenomenon file.

shader "small_droplets" "maya_leather" (

"cellColor" 1. 1. 1.,

"creaseColor" 0. 0. 0.,

"cellSize" = interface "sm_droplet_cell_size",

"density" = interface "sm_droplet_density",

"spottyness" = interface "sm_droplet_spottyness",

"randomness" 1,

"threshold" 0.6,

"creases" 0,

"filter" 0,

"filterSize" 0. 0. 0.,

"filterOffset" 0,

# "blend",

"wrap" 1,

"invert" 0,

"alphaIsLuminance" 0,

"colorGain" 1. 1. 1.,

"colorOffset" 0. 0. 0.,

"alphaGain" 1,

"alphaOffset" 0,

"defaultColor" 0.5 0.5 0.5,

"placementMatrix" = interface "sm_droplet_placement",

"local" 0

)

There are basically four things I'm doing from here on out,

- create a new node from the corresponding node in the shader tree

- copy settings from the original shader tree into the phenomenon

- assign variables to the interface

- assign variables to the output of another node

Take a look at the following,

shader "mask_contrast" "maya_contrast" (

"value" = "small_droplet_mask.outColor",

"contrast" 100. 100. 100.,

"bias" = interface "mask_bias"

)

- I created a contrast node and named it "mask_contrast"

- I set the contrast to 100 in every channel (I actually only need one)

- I assigned the bias to an interface item

- I assigned the input of the contrast node to be the noise map

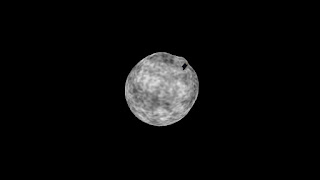
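Because every node follows the same pattern, the text of a node can also be generated instead of typed. The sketch below is one possible way to do that in Python and is not how I wrote the file: the names `Interface`, `Connection`, `format_value` and `shader_block` are invented for this example. It covers the three kinds of assignment used above (literal value, interface item, output of another node) and takes care of the commas, which must separate the properties but must not follow the last one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interface:
    """A value exposed in the phenomenon UI."""
    name: str


@dataclass(frozen=True)
class Connection:
    """A value read from another node, e.g. 'small_droplet_mask.outColor'."""
    source: str


def format_value(value):
    """Format one property value the way it appears in the mi file."""
    if isinstance(value, Interface):
        return f'= interface "{value.name}"'
    if isinstance(value, Connection):
        return f'= "{value.source}"'
    if isinstance(value, (tuple, list)):
        return " ".join(format_value(v) for v in value)
    if isinstance(value, bool):  # must be tested before numbers
        return "on" if value else "off"
    if isinstance(value, float):
        return repr(value)
    return str(value)


def shader_block(instance, shader_type, params):
    """Build the text of one node; params is a dict in declaration order."""
    lines = [f'shader "{instance}" "{shader_type}" (']
    items = list(params.items())
    for index, (key, value) in enumerate(items):
        comma = "," if index < len(items) - 1 else ""  # no trailing comma
        lines.append(f'    "{key}" {format_value(value)}{comma}')
    lines.append(")")
    return "\n".join(lines)


print(shader_block("mask_contrast", "maya_contrast", {
    "value": Connection("small_droplet_mask.outColor"),
    "contrast": (100.0, 100.0, 100.0),
    "bias": Interface("mask_bias"),
}))
```

Floats are written as `100.0` rather than `100.`; both forms describe the same number.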

Every node is a repeat of the above process. Once every node in the original shader tree is represented in the phenomenon file, load and test the shader. Syntax errors are very likely at first, as the input and output names can be quite confusing. Testing the mi file node by node is a good way to debug the phenomenon; building and testing it is similar to how a shader is built and tested in Hypershade. Below is the full phenomenon file.

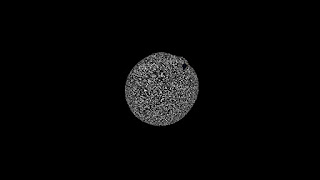

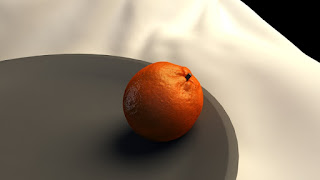


| Droplet UI |
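Many of the errors mentioned above come down to a misspelled node or interface name, and those can be caught before the file is ever loaded. The following Python sketch is an assumption-laden helper rather than an official tool: `check_references` is a made-up name, the checks are plain regular expressions, and it does not validate property names or types against the mi declarations. The small sample phenomenon only exists to exercise the checker and is not meant to be a usable shader.

```python
import re

TYPES = r"\b(?:scalar|color|vector|transform|integer|boolean)"
NODE_RE = r'\bshader\s+"([^"]+)"\s+"[^"]+"\s*\('


def check_references(phen_text):
    """Return a list of naming problems found in one phenomenon."""
    text = re.sub(r"#[^\n]*", "", phen_text)  # drop comments and #: notes
    first_node = re.search(NODE_RE, text)
    header = text[:first_node.start()] if first_node else text
    # Typed names in the header, except the phenomenon name (followed by "(").
    declared = set(re.findall(TYPES + r'\s+"([^"]+)"(?!\s*\()', header))
    instances = set(re.findall(NODE_RE, text))
    used = re.findall(r'=\s*interface\s+"([^"]+)"', text)

    problems = []
    for name in used:
        if name not in declared:
            problems.append(f'unknown interface parameter "{name}"')
    # Covers node connections and the "root = ..." line.
    for source in re.findall(r'=\s*"([^"]+)"', text):
        node = source.split(".")[0]
        if node not in instances:
            problems.append(f'unknown node "{node}" in "{source}"')
    for name in sorted(declared - set(used)):
        problems.append(f'interface parameter "{name}" is never used')
    return problems


# Toy phenomenon for the checker only.
SAMPLE = '''
declare phenomenon
color "demo_phen" (
    vector "mask_bias", #:default 0.6 0.6 0.6
    scalar "mask_frequency" #:default 13
)
shader "mask" "maya_volumenoise" (
    "frequency" = interface "mask_frequency"
)
shader "mask_contrast" "maya_contrast" (
    "value" = "mask.outColor",
    "bias" = interface "mask_bias"
)
root = "mask_contrast"
version 1
apply material
end declare
'''

print(check_references(SAMPLE))
```

An empty list means every `interface` reference and every node connection points at something that is declared in the file.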

Final Droplet Phenomenon

__________________________________________________________

declare phenomenon

color "droplet_phen" (

scalar "diffuse_weight", #:default 0.2

color "reflection_color", #:default 0.9 0.9 0.9

scalar "sm_droplet_cell_size", #:default 0.125 shortname "smdr_size"

scalar "sm_droplet_density", #:default 0.3 shortname "smdr_density"

scalar "sm_droplet_spottyness", #:default 0 shortname "smdr_spot"

scalar "lg_droplet_cell_size", #:default 0.6 shortname "lgdr_size"

scalar "lg_droplet_density", #:default 0.1 shortname "lgdr_density"

scalar "mask_frequency", #:default 13

scalar "mask_time", #:default 0

vector "mask_bias", #:default 0.6 0.6 0.6

scalar "bump_depth", #:default 0.5

transform "sm_droplet_placement",

transform "lg_droplet_placement",

transform "mask_placement"

)

shader "small_droplets" "maya_leather" (

"cellColor" 1. 1. 1.,

"creaseColor" 0. 0. 0.,

"cellSize" = interface "sm_droplet_cell_size",

"density" = interface "sm_droplet_density",

"spottyness" = interface "sm_droplet_spottyness",

"randomness" 1,

"threshold" 0.6,

"creases" 0,

"filter" 0,

"filterSize" 0. 0. 0.,

"filterOffset" 0,

# "blend",

"wrap" 1,

"invert" 0,

"alphaIsLuminance" 0,

"colorGain" 1. 1. 1.,

"colorOffset" 0. 0. 0.,

"alphaGain" 1,

"alphaOffset" 0,

"defaultColor" 0.5 0.5 0.5,

"placementMatrix" = interface "sm_droplet_placement",

"local" 0

)

shader "large_droplets" "maya_leather" (

"cellColor" 1. 1. 1.,

"creaseColor" 0. 0. 0.,

"cellSize" = interface "lg_droplet_cell_size",

"density" = interface "lg_droplet_density",

"spottyness" 0.1,

"randomness" 1,

"threshold" 0.80,

"creases" 0,

"filter" 0,

"filterSize" 0. 0. 0.,

"filterOffset" 0,

# "blend",

"wrap" 1,

"invert" 0,

"alphaIsLuminance" 0,

"colorGain" 1. 1. 1.,

"colorOffset" 0. 0. 0.,

"alphaGain" 1,

"alphaOffset" 0,

"defaultColor" 0.5 0.5 0.5,

"placementMatrix" = interface "lg_droplet_placement",

"local" 0

)

shader "small_droplet_mask" "maya_volumenoise" (

"amplitude" 1,

"ratio" 0.809,

"threshold" 0,

"scale" 1. 1. 1.,

"origin" 0. 0. 0.,

"depthMax" 1,

"frequency" = interface "mask_frequency",

"frequencyRatio" 7.252,

"inflection" 0,

"time" = interface "mask_time",

"noiseType" 3,

# "density",

# "spottyness",

# "sizeRand",

# "randomness",

# "falloff",

# "numWaves",

# "implode",

# "implodeCenter",

"filter" 0,

"filterSize" 0. 0. 0.,

"filterOffset" 0,

# "blend",

"wrap" 1,

"invert" 0,

"alphaIsLuminance" 0,

"colorGain" 1. 1. 1.,

"colorOffset" 0. 0. 0.,

"alphaGain" 1,

"alphaOffset" 0,

"defaultColor" 0.5 0.5 0.5,

"placementMatrix" = interface "mask_placement",

"local" 0

)

shader "mask_contrast" "maya_contrast" (

"value" = "small_droplet_mask.outColor",

"contrast" 100. 100. 100.,

"bias" = interface "mask_bias"

)

shader "mib_color_mix1" "mib_color_mix" (

"num" 2,

"mode_0" 2,

"mode_1" 0,

"mode_2" 0,

"mode_3" 0,

"mode_4" 0,

"mode_5" 0,

"mode_6" 0,

"mode_7" 0,

"weight_0" = "mask_contrast.outValueX",

"weight_1" 1,

"weight_2" 1,

"weight_3" 1,

"weight_4" 1,

"weight_5" 1,

"weight_6" 1,

"weight_7" 1,

"color_0" = "small_droplets.outColor",

"color_1" = "large_droplets.outColor",

"color_2" 0. 0. 0.,

"color_3" 0. 0. 0.,

"color_4" 0. 0. 0.,

"color_5" 0. 0. 0.,

"color_6" 0. 0. 0.,

"color_7" 0. 0. 0.,

"color_base" 0. 0. 0.

)

shader "bump3d1" "maya_bump3d" (

"normalCamera" 0. 0. 1.,

"bumpValue" = "mib_color_mix1.outColorR",

"bumpDepth" = interface "bump_depth",

"bumpFilter" 1.,

"bumpFilterOffset" 0.,

"tangentUCamera" 1. 0. 0.,

"tangentVCamera" 0. 1. 0.

)

shader "misss_set_normal1" "misss_set_normal" (

"normal" = "bump3d1.outNormal",

"space" 1,

"add" 0

)

shader "mia_material1" "mia_material" (

"diffuse_weight" = interface "diffuse_weight",

"diffuse" = "mib_color_mix1.outColor",

"diffuse_roughness" 0.,

"reflectivity" = "mib_color_mix1.outColorR",

"refl_color" = interface "reflection_color",

"refl_gloss" 1.,

"refl_gloss_samples" 8,

"refl_interpolate" off,

"refl_hl_only" off,

"refl_is_metal" off,

"transparency" 0.,

"refr_color" = "mib_color_mix1.outColor",

"refr_gloss" 1.,

"refr_ior" 1.52,

"refr_gloss_samples" 8,

"refr_interpolate" off,

"refr_translucency" off,

"refr_trans_color" 0.7 0.6 0.5 1.,

"refr_trans_weight" 0.5,

"anisotropy" 1.,

"anisotropy_rotation" 0.,

"anisotropy_channel" -1,

"brdf_fresnel" off,

"brdf_0_degree_refl" 0.2,

"brdf_90_degree_refl" 1.,

"brdf_curve" 5.,

"brdf_conserve_energy" on,

"intr_grid_density" 2,

"intr_refl_samples" 2,

"intr_refl_ddist_on" off,

"intr_refl_ddist" 0.,

"intr_refr_samples" 2,

"single_env_sample" off,

"refl_falloff_on" off,

"refl_falloff_dist" 0.,

"refl_falloff_color_on" off,

"refl_falloff_color" 0. 0. 0. 1.,

"refl_depth" 5,

"refl_cutoff" 0.01,

"refr_falloff_on" off,

"refr_falloff_dist" 0.,

"refr_falloff_color_on" off,

"refr_falloff_color" 0. 0. 0. 1.,

"refr_depth" 5,

"refr_cutoff" 0.01,

"indirect_multiplier" 1.,

"fg_quality" 1.,

"fg_quality_w" 1.,

"ao_on" off,

"ao_samples" 16,

"ao_distance" 10.,

"ao_dark" 0.2 0.2 0.2 1.,

"ao_ambient" 0. 0. 0. 1.,

"ao_do_details" on,

"thin_walled" off,

"no_visible_area_hl" on,

"skip_inside_refl" on,

"do_refractive_caustics" off,

"backface_cull" off,

"propagate_alpha" off,

"hl_vs_refl_balance" 1.,

"cutout_opacity" 1.,

"additional_color" 0. 0. 0. 1.,

"no_diffuse_bump" off,

"bump" = "misss_set_normal1.normal",

"mode" 4,

"lights" []

)

shader "dropletSurface" "maya_surfaceshader" (

"outColor" = "mia_material1.outValue",

"outTransparency" 0. 0. 0.,

"outMatteOpacity" 1. 1. 1.,

"outGlowColor" 0. 0. 0.

)

shader "shadingEngine" "maya_shadingengine" (

"surfaceShader" = "dropletSurface.outColor",

"cutAwayOpacity" 0.,

"alphaMode" 0

)

root = "shadingEngine"

version 1

apply texture, material

end declare